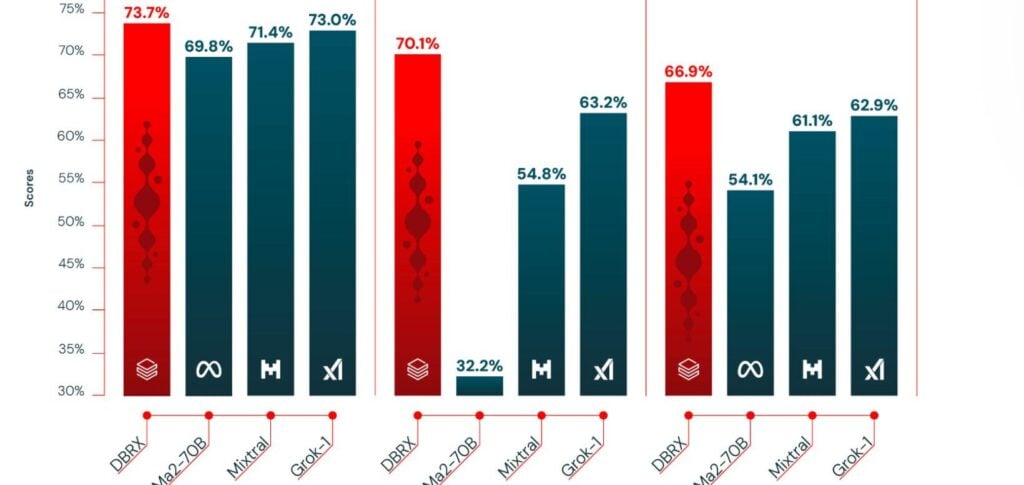

DBRX outperforms leading open-source models such as Mixtral MoE, Llama 2 70B and Grok-1 in language understanding, programming and mathematics.


Additionally, its inference is up to 2x faster than Llama 2 70B, and it is about 40% the size of Grok-1 in both total and active parameter counts.

The model also outperforms GPT-3.5 on long-context tasks and RAG (retrieval-augmented generation) benchmarks.

Notably, training DBRX from scratch took only $10 million and two months, which highlights how efficiently and effectively Databricks develops language models.


By building a GPT-3.5-level model with just $10 million and two months of training, Databricks sets a new standard for the industry. However, while topping the open-source leaderboard is impressive, the imminent arrival of Meta's highly anticipated Llama 3 suggests the model is unlikely to remain at the top for long.


* The text of this article was partially generated by artificial intelligence tools, state-of-the-art language models that assist in the preparation, review, translation and summarization of texts. Text entries were created by Curto News, and responses from AI tools were used to improve the final content.

It is important to highlight that AI tools are just tools, and the final responsibility for the published content lies with Curto News. By using these tools responsibly and ethically, our objective is to expand communication possibilities and democratize access to quality information. 🤖

