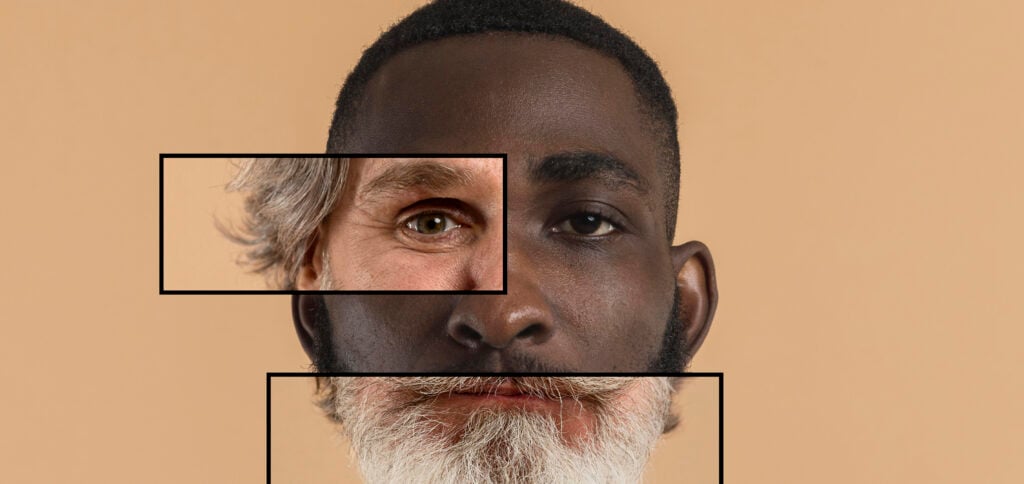

Most deepfake detectors rely on a learning strategy that depends heavily on the dataset used to train them. They then use AI to detect signals that may not be apparent to the human eye.

These signals may include blood flow and heart rate. However, experts warned that these detection methods do not always work on people with darker skin tones, and that if training sets do not include all ethnicities, accents, genders, ages, and skin tones, the resulting detectors are prone to bias.

Bias being built in

Over the past two years, experts in AI and deepfake detection have raised concerns that bias is being built into these systems.

Rijul Gupta, a synthetic media expert and co-founder and CEO of DeepMedia, which uses AI and machine learning to evaluate visual and audio cues for signs of synthetic manipulation, said: “Data sets are always heavily skewed toward middle-aged white men, and this type of technology always negatively impacts marginalized communities.”

Monk Skin Tone Scale

Ellis Monk, a professor of sociology at Harvard University and a visiting scholar at Google, developed the Monk Skin Tone Scale.

It is designed to be more inclusive than the tech industry's standard scale, offering a broader spectrum of skin tones for use in datasets and machine learning models.

Monk said: “Darker-skinned people have been excluded from how these different forms of technology have been developed from the beginning. You need to build new datasets that have more coverage, more representation in terms of skin tone and that means you need some kind of measure that is standardized, consistent and more representative than previous scales.”

* The text of this article was partially generated by artificial intelligence tools, state-of-the-art language models that assist in the preparation, review, translation and summarization of texts. Text entries were created by Curto News, and responses from the AI tools were used to improve the final content.

It is important to highlight that AI tools are just tools, and final responsibility for the published content lies with Curto News. By using these tools responsibly and ethically, we aim to expand communication possibilities and democratize access to quality information. 🤖