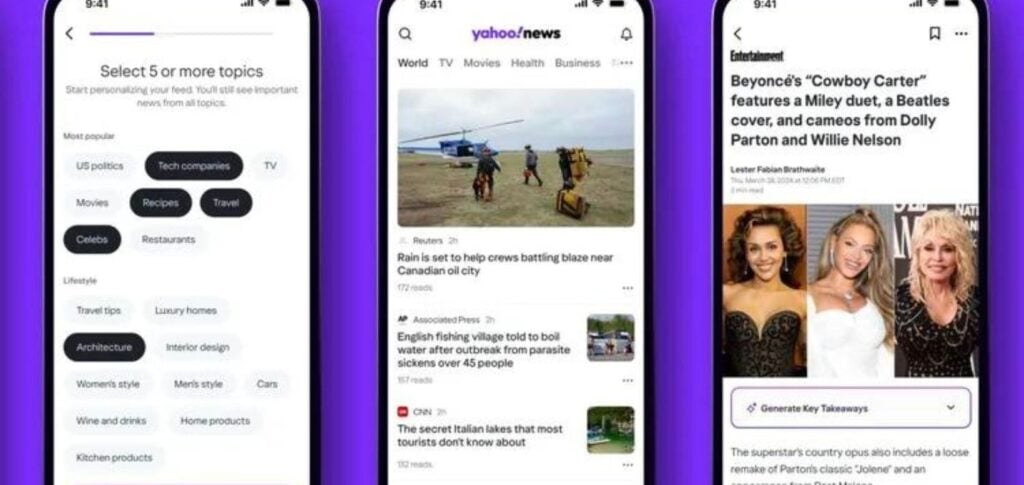

According to a report by 404 Media, 4chan and Telegram users exploited a loophole in Microsoft Designer to generate some of the deepfakes of Taylor Swift and other celebrities.

Microsoft sent a statement to 404 Media: “Our Code of Conduct prohibits the use of our tools to create non-consensual adult or intimate content, and any repeated attempts to produce content that contravenes our policies may result in loss of access to the service.”

The company also said it could not confirm whether the images were actually created in Designer, but emphasized that it has “large teams in the development of guardrails and other security systems aligned with our principles of responsible AI, including content filtering, operational monitoring and abuse detection to mitigate system misuse and help create a safer environment for users.”

Read also